Tapioca Toys Cardboard is our contribution to demonstrating how the powerful little devices we carry in our pockets can be used to feel, understand and think about the world around us differently. It's magical. It's fun to build. It's cheap. It's open source, and it makes interfacing human expressiveness with machines easier than ever. This is how we define Tapioca Toys Cardboard, the main lab project we worked on this year.

Where does this come from?

Right, first things first. How did we come to design a tangible interface where one uses tapioca to control an iPhone? And why is it made out of cardboard? Seriously, how can you play music with a “controller” that is so unpredictable, and that never looks or feels the same twice?

Thinking beyond the flatness of screens

At User Studio we are a bunch of designers with a strong will to think beyond the flatness of screens. Don't get us wrong though: we love screens and what we can do with them (especially when they're interactive). But we believe that as designers, if we want to create the strongest experiences possible, we need to further investigate the idea of interaction itself: it does not boil down to clicks, keyboard shortcuts, taps or even multi-touch gestures.

And by the way, that revolution is already under way. Take video games, virtual reality or any other activity where people interact with machines: more and more of them go beyond common interfaces such as the mouse, keyboard, touch screen, joystick or steering wheel. The Wiimote and the Kinect are perfect examples of this renewed relationship between humans and machines, applied to consumer electronics.

A little bit of history

Cardboard is not our first shot at tangible interfaces… or at tapioca. We had a version for the iPad that went viral in 2014, and at the end of last year, at the Centre Pompidou (Paris), we introduced a multiplayer/performance edition as well as a fully autonomous product.

It all started with a discussion we had with composer Roland Cahen and researcher Diemo Schwarz (ISMM, Ircam): can there be an interesting way to interact with thousands of tiny visual and audio grains at once? Is there anything beyond the mouse that we can use to handle particles? If there were an interface to do that, how would it work?

An exciting challenge for designers. Analyzing the idea, we realized we were looking to create and build real-world interfaces:

- like in the real world, they would be so nuanced that you could never cancel an action or go back to a previous state;

- and like in the real world, they would be immensely expressive rather than discrete or binary, gesture-minded rather than reproducibility-obsessed.

We translated this into the concept of Dirty Tangible Interfaces (and wrote articles about it for the NIME 2012 and CHI 2013 conferences), then started looking for the best way to turn it into reality: it could just as well be a ball of fur with hundreds of thousands of hairs as a sand bowl with millions of little rocks. We went with the bowl solution because we had an "easier" technical resolution in mind: filming and analyzing the amount of light going through a semi-transparent material such as sand or tapioca dunes would only require a camera and computer vision software. The Tapioca Toys were born.

Low tech, low cost, low hassle

That's the whole idea behind this Cardboard version: making it easily accessible to as many users as possible.

Up until now, our Tapioca Toys were hard to build and relatively expensive. To build the previous, Autonomous version, for instance, we needed to source electronic parts, spend time soldering, install our software… With the Cardboard edition it's now a matter of five minutes: install the apps from the App Store, build the kit (made out of cardboard and plastic mirrors), and it's ready to play.

State, Movement and Blobs

There are three main things that Cardboard lets our apps acquire in terms of interaction data:

- state: the current state of the material at every point of the interface

- quantity of movement: how much movement is happening at every point of the interface

- motion blobs: the specific points or areas of the interface where movement exceeds a certain threshold
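
To make these three notions concrete, here is a minimal Python sketch. It is an illustration only, not the code our apps run (those are built in C++ with Cinder). It assumes a backlit grayscale frame stored as rows of 0-255 values, a simple linear mapping from brightness to material coverage, plain frame differencing for movement, and 4-connected flood fill for blobs. All function names are hypothetical.

```python
from collections import deque


def state(frame, max_level=255):
    """Estimate material coverage per pixel (assumption: darker means more material)."""
    return [[1.0 - px / max_level for px in row] for row in frame]


def movement(previous, current):
    """Quantity of movement per pixel: absolute brightness change between two frames."""
    return [
        [abs(c - p) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(previous, current)
    ]


def motion_blobs(motion, threshold):
    """Group 4-connected pixels whose movement exceeds the threshold.

    Returns one (centroid_x, centroid_y, area) tuple per blob.
    """
    height, width = len(motion), len(motion[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or motion[y][x] <= threshold:
                continue
            # Flood-fill outwards from this moving pixel.
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and motion[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            area = len(pixels)
            blobs.append((
                sum(px for px, _ in pixels) / area,
                sum(py for _, py in pixels) / area,
                area,
            ))
    return blobs


# Tiny made-up example: two neighbouring pixels get darker between frames.
previous = [[200] * 4 for _ in range(4)]
current = [row[:] for row in previous]
current[1][1] = current[1][2] = 80
print(motion_blobs(movement(previous, current), threshold=50))
```

On a real device the frames would come from the camera, and the threshold would need tuning to the lighting and the material.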

These characteristics are quite typical of what you can gather from a computer vision system — i.e. a camera and the analysis software that goes with it. To illustrate this we built our first apps:

- the Terrain Editor app lets users design 3D landscapes simply by molding the material (it uses state)

- the Soundscapes app, made with the grain synthesizer technology of Ircam's ISMM team, lets users play with 8 different sound corpora (water, wind, fire, birds, bees, electronics…); it uses quantity of movement and blobs

- the Ribbons drawing app lets users draw infinite, colourful ribbons simply by moving the moldable material (it uses blobs)

Open-source

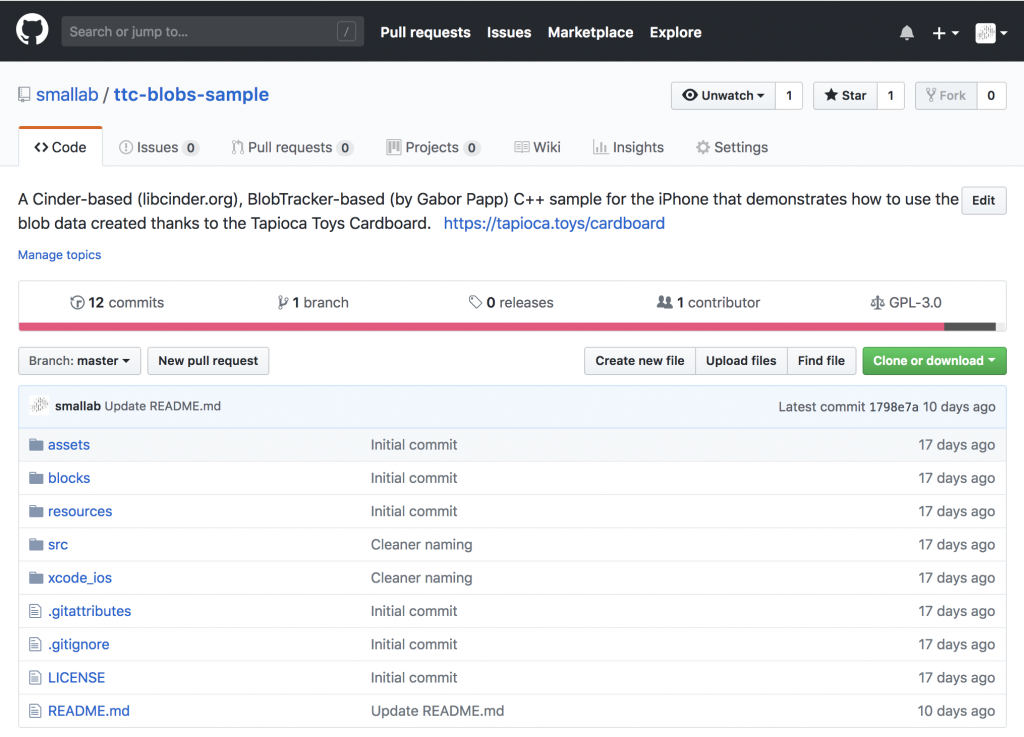

Because there's no point in building a new kind of interface if no apps are made for it, we've started open-sourcing some of our C++ code base so that others can build powerful apps. Our first sample is based on Cinder, like all our apps, and on the BlobTracker block for Cinder by Gabor Papp. It's called the TTC Blobs sample and is available on GitHub, of course.

More samples are coming.

Made for creative coders

We've also built an app that shares blob data over Wi-Fi in Open Sound Control (OSC) format: the OSC Blobs app (available on the App Store, like the rest of our software). It lets creative coders build their own apps with the Cardboard's interaction data without having to dive into C++. For example, Processing is a very easy and efficient way to acquire the data in OSC format. Our TTC OSC Blobs Receiver in Java/Processing is available on GitHub and demonstrates just that.

But why not for Android?

😢 We would love to cover Android. An Android-compatible version would make things accessible to even more people. The issue is that Android phones come in a seemingly endless variety of camera positions and configurations… which we can't reasonably deal with right now. Maybe in the future.

Where to next?

An iPad version is definitely in the works. Most importantly, we need to make more examples, distribute more code samples and organize more workshops. Workshops, workshops, workshops. If you're interested in having us and our interfaces over for a workshop with your team or students, wherever you are in the world, we can probably work something out. Contact us at hello@tapioca.toys.

Making-of

Obviously, most of our time went into the concept and the design of the device. To reach the final iteration, we went through dozens of cardboard prototypes, tested several optical ideas, went back to our geometry classes and developed new shader skills…

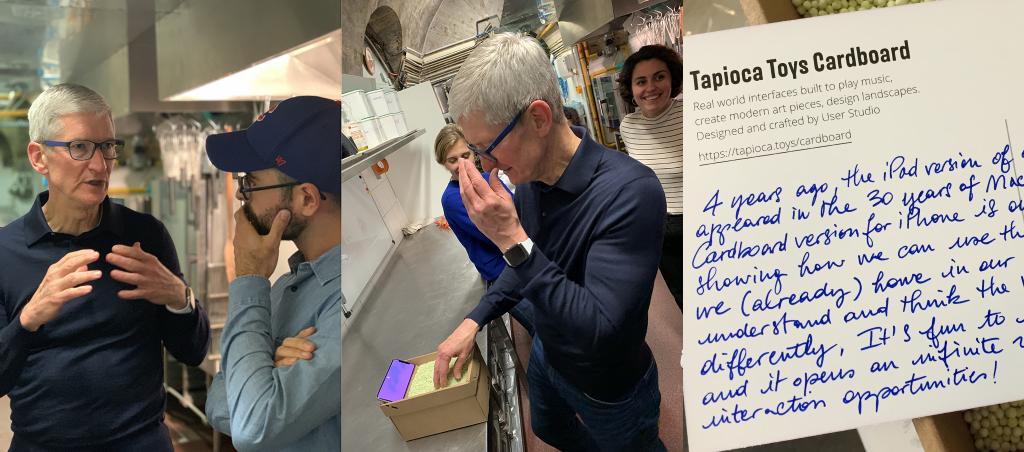

Socially

Yes, you got that right: Tim Cook (Apple's CEO) gave Cardboard a shot and enjoyed it (and yes, we were standing in a professional kitchen… but not cooking with tapioca). Tim even remembered the 1.5 seconds we had in the "30 years of Mac" video that came out in 2014.

Stay tuned for more news via the @TapiocaToys Twitter account, or by following the #tapiocatoys hashtag on Instagram.